However, the MMOD implementation in dlib used HOG feature extraction followed by a single linear filter. This means it's incapable of learning to detect objects that exhibit complex pose variation or have a lot of other variability in how they appear. To get around this, users typically train multiple detectors, one for each pose. That works OK in many cases but isn't a really good general solution. Fortunately, over the last few years convolutional neural networks have proven themselves to be capable of dealing with all these issues within a single model.

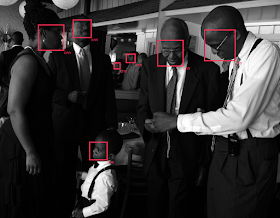

So the obvious thing to do was to add an implementation of MMOD with the HOG feature extraction replaced with a convolutional neural network. The new version of dlib, v19.2, contains just such a thing. On this page you can see a short tutorial showing how to train a convolutional neural network using the MMOD loss function. It uses dlib's new deep learning API to train the detector end-to-end on the very same 4 image dataset used in the HOG version of the example program. Happily, and very much to the surprise of myself and my colleagues, it learns a working face detector from this tiny dataset. Here is the detector run over an image not in the training data:

I expected the CNN version of MMOD to inherit the low training data requirements of the HOG version of MMOD, but working with only 4 training images is very surprising considering other deep learning methods typically require many thousands of images to produce any kind of sensible results.

The detector is also reasonably fast for a CNN. On the CPU, it takes about 370ms to process a 640x480 image. On my NVIDIA Titan X GPU (the Maxwell version, not the newer Pascal version) it takes 45ms to process an image when images are processed one at a time. If I group the images into batches then it takes about 18ms per image.

To really test the new CNN version of MMOD, I ran it through the leading face detection benchmark, FDDB. This benchmark has two modes, 10-fold cross-validation and unrestricted. Both test on the same dataset, but in the 10-fold cross-validation mode you are only allowed to train on data in the FDDB dataset. In the unrestricted mode you can train on any data you like so long as it doesn't include images from FDDB. I ran the 10-fold cross-validation version of the FDDB challenge. This means I trained 10 CNN face detectors, each on 9 folds and tested on the held out 10th. I did not perform any hyperparameter tuning. Then I ran the results through the FDDB evaluation software and got this plot:

The X axis is the number of false alarms produced over the entire 2845 image dataset. The Y axis is recall, i.e. the fraction of faces found by the detector. The green curve is the new dlib detector, which in this mode only gets about 4600 faces to train on. The red curve is the old Viola Jones detector which is still popular (although it shouldn't be, obviously). Most interestingly, the blue curve is a state-of-the-art result from the paper Face Detection with the Faster R-CNN, published only 4 months ago. In that paper, they train their detector on the very large WIDER dataset, which consists of 159,424 faces, and arguably get worse results on FDDB than the dlib detector trained on only 4600 faces.

As another test, I created the dog hipsterizer, which I made a post about a few days ago. The hipsterizer used the exact same code and parameter settings to train a dog head detector. The only difference was that the training data consisted of 9240 dog heads instead of human faces. That produced the very high quality models used in the hipsterizer. So now we can automatically create fantastic images such as this one :)

Barkhaus dogs looking fancy

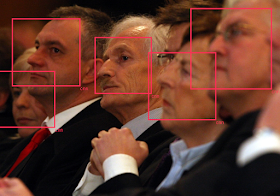

As one last test of the new CNN MMOD tool I made a dataset of 6975 faces. This dataset is a collection of face images selected from many publicly available datasets (excluding the FDDB dataset). In particular, there are images from ImageNet, AFLW, Pascal VOC, the VGG dataset, WIDER, and FaceScrub. Unlike FDDB, this new dataset contains faces in a wide range of poses rather than consisting of mostly front facing shots. To give you an idea of what it looks like, here are all the faces in the dataset tightly cropped and tiled into one big image:

Using the new dlib tooling I trained a CNN on this dataset using the same exact code and parameter settings as used by the dog hipsterizer and previous FDDB experiment. If you want to run that CNN on your own images you can use this example program. I tested this CNN on FDDB's unrestricted protocol and found that it has a recall of 0.879134, which is quite good. However, it produced 90 false alarms. That sounds bad until you look at them and find that it's actually finding labeling errors in FDDB. The following image shows all the "false alarms" it outputs on FDDB. All but one of them are actually faces.

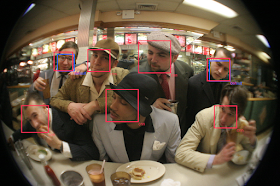

Finally, to give you a more visceral idea of the difference in capability between the new CNN detector and the old HOG detector, here are a few images where I ran dlib's default HOG face detector (which is actually 5 HOG models) and the new CNN face detector. The red boxes are CNN detections and blue boxes are from the older HOG detector. While the HOG detector does an excellent job on easy faces looking at the camera, you can see that the CNN is way better at handling not just the easy cases but all faces in general. And yes, I ran the HOG detector on all the images, it's just that it fails to find any faces in some of them.

That's quite impressive, nice work. Any plans for a Python binding?

Wow. Really good work.

The API makes heavy use of C++11, so no general Python binding is possible, although I might make very narrow bindings to specific models at some point.

Awesome job!!

Thanks! :)

Thanks, it is awesome!!!

This is a good deep learning library for C++ programmers: it is easy to use (nice, clean API), supports GPGPU, provides nice examples, and is easy to build. It is like a dream come true.

This is incredible, thanks for sharing your great work!

I think I see two non-faces: the "4" jersey, and also the square just above it.

I was about to try this out, but I'm having some growing pains building dlib on my OS X 10.11 laptop: (1) using Anaconda creates X11 problems, which I think I figured out; (2) I got CUDA 8.0 built with Xcode 7.3 and I've been using it with Python, and dlib finds CUDA but says it can't compile anything and can't find cuDNN. If I have time to dig into these issues more and can provide more precise info I'll post an issue on GitHub.

Thanks.

The thing above the 4 is a wooden carving of some guy's head, which is a little hard to see from the crop.

Thanks for the latest update. Finally able to run the deep learning example with Visual Studio 2015.

The original file names from the MNIST data set contain '.' while you hard-coded '-'.

No problem.

I'm looking at the MNIST site (http://yann.lecun.com/exdb/mnist/) and the files don't have any '.' in them.

What is the test-time performance compared to the HOG-based detector?

If you run the CNN on the GPU then it's about the same speed as HOG (which only runs on the CPU).

Great work!!

I have a question about the receptive field you mention in the code comment. You say it is a little above 50x50, but I can't seem to reproduce this size; I end up with a receptive field of 117x117 pixels.

Best regards

Well, the first few layers downsample the image by a factor of 8 so you can think of it as turning it into 8x8 cells. I realize the cells have receptive fields that are larger than 8x8, but most of the action of their focus is in a smaller area. Then the detector pulls a 6x6 grid of them at the end, after some filtering.

I should probably change the wording of the example to be a little less confusing :)
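The two numbers in this exchange are not actually in conflict. Assuming (this layer list is a hypothetical reconstruction, not copied from dnn_mmod_ex.cpp) that the network is three 5x5 stride-2 convolutions (the factor-of-8 downsampling), three 3x3 stride-1 convolutions, and a final 6x6 filter (the 6x6 grid of cells), the standard receptive-field recurrence gives exactly the commenter's 117x117 theoretical receptive field with an 8-pixel stride. The "a little above 50x50" figure describes where most of the influence is concentrated, which this calculation does not measure. A minimal sketch:

```cpp
#include <vector>

// One layer's kernel size and stride (square kernels, same in x and y).
struct layer_spec {
    long kernel;
    long stride;
};

struct rf_result {
    long size;    // theoretical receptive field side length, in input pixels
    long stride;  // distance in input pixels between adjacent output cells
};

// Standard receptive-field recurrence: each layer grows the field by
// (kernel - 1) * current_stride, then multiplies the stride by its own stride.
rf_result receptive_field(const std::vector<layer_spec>& layers) {
    long size = 1, stride = 1;
    for (const layer_spec& l : layers) {
        size += (l.kernel - 1) * stride;
        stride *= l.stride;
    }
    return {size, stride};
}

// Hypothetical layer list for the detector discussed above (an assumption).
std::vector<layer_spec> assumed_mmod_layers() {
    return {
        {5, 2}, {5, 2}, {5, 2},  // downsampling: 3 x (5x5, stride 2) -> stride 8
        {3, 1}, {3, 1}, {3, 1},  // 3 x (3x3, stride 1)
        {6, 1},                  // final 6x6 filter over the 8x8 cells
    };
}
```

Running `receptive_field(assumed_mmod_layers())` yields a size of 117 and a stride of 8 under these assumptions.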

Thank you for the explanation; it helps me understand how to change the network for other objects. The results of the experiments I did so far with the face model are remarkable. Keep up the good work ;).

I have another question though. I am trying to train a pedestrian model of 22x50. I have altered all of the annotations to more or less this aspect ratio (rounded dimension values). When training with these annotations, I get the error "Encountered a ground truth rectangle with a width and height of 71 and 90...aspect ratio is to different from the detection window", although these dimensions are not present in the annotation file.

Are the ground truth annotations cropped somehow when they overlap with other annotations or the image boundary maybe? And will this problem still appear for 'ignored' annotations?

Best regards

No problem. Glad you like dlib :)

No, there isn't any cropping or anything like that. You must have a box in your dataset that you don't expect. If that error says it's there then it's there.

Hi,

I just finished reading your MMOD paper and it is a brilliant concept. I am curious how the margin-based optimization of MMOD is combined with the gradient-descent-based optimization of neural nets. Is there more material about the convnet version I can read somewhere?

Thanks. The convnet version literally just optimizes the same objective function as in the MMOD paper, except that instead of using a cutting plane solver it uses vanilla SGD.

Great work! I am trying your code with CUDA 8.0 (Ubuntu 16.04, GeForce GT 520), but when I ran the dnn_mmod_ex program I got this error:

Error while calling cudnnCreate(&handles[new_device_id]) in file /home/ale/dlib-19.2/dlib/dnn/cudnn_dlibapi.cpp:102. code: 6, reason: A call to cuDNN failed

Can you help me to solve this issue?

Thanks!

I don't know what's wrong. You probably have something wrong with your computer or cuda install.

@florisdesmedt -- I saw the same issue when training a detector for a rectangular object (2x1 aspect ratio). I believe the issue is the random crop rotation. After a large rotation (e.g., 45 degrees) the box around the rotated region no longer has a 2x1 shape; it is basically 1x1, and training fails. This isn't really a problem for square objects, since the box around a rotated square is still square and stays within the tolerance. I reduced the random crop rotation to 5 degrees (from the default 30) and it worked:

```cpp
cropper.set_max_rotation_degrees(0);
```
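The geometry behind this explanation is easy to check. Assuming, as the comment suggests, that after rotation the truth box becomes the axis-aligned box enclosing the rotated rectangle (an assumption, not checked against dlib's random_cropper source), the sketch below computes that enclosing box's aspect ratio. A 2x1 box rotated by 45 degrees becomes square, a 5 degree rotation keeps it near 1.78, and a square stays square at any angle:

```cpp
#include <cmath>

// Aspect ratio (width / height) of the axis-aligned box that encloses a
// w x h rectangle after rotating it by `degrees` about its center.
double rotated_box_aspect(double w, double h, double degrees) {
    const double pi = 3.14159265358979323846;
    const double t = degrees * pi / 180.0;
    const double c = std::fabs(std::cos(t));
    const double s = std::fabs(std::sin(t));
    const double box_w = w * c + h * s;  // enclosing-box width
    const double box_h = w * s + h * c;  // enclosing-box height
    return box_w / box_h;
}
```

For example, `rotated_box_aspect(2.0, 1.0, 45.0)` is 1 (up to rounding), which would explain why a 2x1 detection window can no longer match the rotated labels.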

@Davis, great job. I see that you use a "number of false alarms vs. recall" figure to compare Faster R-CNN, dlib's MMOD CNN, and Viola-Jones.

May I know if you have similar figures comparing Faster R-CNN, dlib's MMOD CNN, and dlib's HOG detector?

The MMOD paper has some more such plots on the FDDB dataset, including one for HOG.

This is awesome!! I tried the dog hipsterizer in CPU mode and it works pretty well. I was trying to train a detector from scratch, so I compiled dlib with the cuDNN library.

I used:

```shell
g++ -std=c++11 -O3 -I.. ../dlib/all/source.cpp -lpthread -lX11 -lcudnn -ljpeg -lpng -L/~/external/cuda/lib64/ -D DLIB_JPEG_SUPPORT dnn_mmod_ex.cpp
```

It generated a binary, but I don't see any speed improvement compared with CPU mode. How do you compile the example?

Thanks for sharing

You didn't compile any of the CUDA code. You have to use NVIDIA's nvcc compiler and do a number of other things. You should use CMake to compile the project; it will do all the right stuff.

Hi, how could we reuse a trained network to extract features from images and then train our own standard machine learning classifier (such as softmax or SVM), like the OverFeat example in sklearn_theano? Thanks

Yes, you can get the output of the network's last layer, or any other layer. Read the documentation to see how. In particular, there are two introduction examples that are important to read to understand the API.

> there are two introduction examples that are important to read to understand the API.

Do you mean dnn_introduction1_ex.cpp and dnn_introduction2_ex.cpp?

Please correct me if I am wrong. Can we use

```cpp
layer<N>(pnet).get_output();
```

(where N is the index of the layer we want) to extract features from images and use them to train our own classifier?

Do you have any plans to provide more pretrained networks, like the network used in Deep Face Recognition? We could implement a face descriptor based on it if it existed.

Forgive me if I ask anything stupid.

Yes, those introductions. There is also an entire API reference http://dlib.net/faq.html#Whereisthedocumentationforobjectfunction

I'm going to be adding more things to the deep learning toolkit for the foreseeable future. That will probably include more examples with pretrained models.

I just used dlib to train a neural network for the first time using this library. It was super easy. A lot of the nonsense you have to deal with in other libraries is taken care of for you automatically. Plus the code has basically 0 dependencies, I can deploy it in an embedded device (No Python, lua, lmdb, or a whole menu of other stuff). I've already said this once before, but really *great* job on this library. Dlib deserves a lot more attention as a first-class neural net library.

Thanks :)

Yeah, I wanted to make a library that professionals would want to use. Most of the other tools are obviously written by grad students who don't know how to code well. Dlib will also automatically adjust the learning rate as well as detect convergence, and so knows when to stop. So there shouldn't be much fiddling with learning rates. Just run it and wait. Although you do need to set the "steps without progress threshold", but setting it to something big like 10,000 or 8,000 should always be fine. I have it set to something smaller in the examples just so they run faster.
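To illustrate the idea of a "steps without progress" threshold driving the learning rate, here is a simplified, hypothetical schedule. dlib's trainer decides "no progress" with its own statistical test over recent loss values, not with this plain counter, and the shrink factor and minimum rate used below are example values only:

```cpp
#include <cstddef>

// Simplified plateau schedule (hypothetical, not dlib's implementation).
// The learning rate is multiplied by `shrink` whenever the best loss seen so
// far has not improved for `threshold` consecutive steps; training should stop
// once the rate falls below `min_lr`.
class plateau_schedule {
public:
    plateau_schedule(double lr, double shrink, double min_lr, std::size_t threshold)
        : lr_(lr), shrink_(shrink), min_lr_(min_lr), threshold_(threshold) {}

    // Record one loss value; returns false once training should stop.
    bool step(double loss) {
        if (loss < best_) {
            best_ = loss;
            stale_ = 0;
        } else if (++stale_ >= threshold_) {
            lr_ *= shrink_;
            stale_ = 0;
        }
        return lr_ >= min_lr_;
    }

    double learning_rate() const { return lr_; }

private:
    double lr_, shrink_, min_lr_;
    std::size_t threshold_;
    double best_ = 1e300;      // best loss seen so far
    std::size_t stale_ = 0;    // steps since the best loss last improved
};
```

A larger threshold means a plateau has to persist longer before the rate drops, which trades training time for robustness to noisy loss values.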

Hi, I have a question about dnn_introduction_ex.cpp.

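```cpp
using net_type = loss_multiclass_log<
                            fc<10,
                            relu<fc<84,
                            relu<fc<120,
                            max_pool<2,2,2,2,relu<con<16,5,5,1,1,
                            max_pool<2,2,2,2,relu<con<6,5,5,1,1,
                            input<matrix<unsigned char>>
                            >>>>>>>>>>>>;
```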

Why is the first fc output size 120 and not 784?

The MNIST images are 28*28.

I checked the source code of max_pool; by default it uses zero padding.

The first max_pool turns 28*28 into 14*14.

The second max_pool turns 14*14 into 7*7.

So the final size should be 7*7*16 = 784.

I think you are confusing input sizes with output sizes.
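The commenter's arithmetic is right about the input side: 784 values enter the first fc layer. The 120 is that layer's output size, fixed by its template argument. A small sketch of the shape bookkeeping, assuming (not verified here against dlib's defaults) that the 5x5 stride-1 convolutions are zero-padded by 2 so they keep the spatial size, and that the 2x2 stride-2 pools are unpadded:

```cpp
// Output size along one spatial dimension for a convolution or pooling layer:
// 1 + (in + 2*pad - kernel) / stride, using integer division.
constexpr long out_size(long in, long kernel, long stride, long pad) {
    return 1 + (in + 2 * pad - kernel) / stride;
}

// Number of values reaching the first fully connected layer under the
// padding assumptions stated above.
constexpr long lenet_fc_input_size() {
    long s = 28;               // MNIST images are 28x28
    s = out_size(s, 5, 1, 2);  // con<6,5,5,1,1>    -> 28x28x6
    s = out_size(s, 2, 2, 0);  // max_pool<2,2,2,2> -> 14x14x6
    s = out_size(s, 5, 1, 2);  // con<16,5,5,1,1>   -> 14x14x16
    s = out_size(s, 2, 2, 0);  // max_pool<2,2,2,2> -> 7x7x16
    return s * s * 16;         // 784 inputs to fc<120,...>
}

// The first template argument of fc<N,...> is its number of outputs.
constexpr long first_fc_outputs = 120;
```

So fc<120,...> maps 784 inputs to 120 outputs; the next layers then go 120 to 84 to 10.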

> I'm going to be adding more things to the deep learning toolkit for the foreseeable future. That will probably include more examples with pretrained models.

Thanks, looking forward to it.

Do you have any plans to support parallel computation on the CPU in the future?

Which part is the most time-consuming on the CPU?

Maybe unrolling some for loops could make the CPU part faster.

Hi Kyle McDonald, I have the same issue as you. I used a brute-force solution to fix it.

I changed the line

```cmake
if (CUDA_FOUND AND cudnn AND cudnn_include AND COMPILER_CAN_DO_CPP_11 AND cuda_test_compile_worked AND cudnn_test_compile_worked)
```

to

```cmake
if(1)
```

Then everything worked as expected (I confirmed CUDA and cuDNN worked on my machine before changing it to if(1)).

But to make the program compile, I need to link to:

- cublas.lib
- cublas_device.lib
- cudnn.lib
- curand.lib

and make sure my program knows where to locate:

- cublas64_80.dll
- cudart64_80.dll
- cudnn64_5.dll
- curand64_80.dll

Here is the time comparison of CPU vs. GPU:

- CPU: elapsed time: 13213s
- GPU: elapsed time: 528s

The GPU is around 25 times faster than the CPU.

I enabled /arch:AVX when training on the CPU, but I think there is still room to improve the speed, because I noticed dlib only uses one CPU core to train the network.

You said the error was this:

```text
*** cuDNN V5.0 OR GREATER NOT FOUND. DLIB WILL NOT USE CUDA. ***
*** If you have cuDNN then set CMAKE_PREFIX_PATH to include cuDNN's folder.
```

So it's not finding cudnn. Did you set CMAKE_PREFIX_PATH to include cuDNN's folder so cmake can find it?

Good stuff.

I am trying to use the program to detect my palm instead of my face. I have given it a total of 4 images (3 training and 1 testing) just to see what happens. I get the following error:

```text
num training images: 3
num testing images: 1
detection window width,height: 34,47
overlap NMS IOU thresh: 0
overlap NMS percent covered thresh: 0
done training
training results: 1 0 0
testing results:
Error detected at line 31.
Error detected in file filetree/dlib/dlib/../dlib/dnn/validation.h.
Error detected in function const matrix dlib::test_object_detection_function(loss_mmod &, const image_array_type &, const std::vector > &, const dlib::test_box_overlap &, const double) [SUBNET = dlib::add_layer, dlib::add_layer, dlib::add_layer, dlib::add_layer, dlib::add_layer, dlib::add_layer, dlib::add_layer, dlib::add_layer, dlib::add_layer, dlib::add_layer, dlib::add_layer, dlib::add_layer, dlib::add_layer, dlib::input_rgb_image_pyramid >, void>, void>, void>, void>, void>, void>, void>, void>, void>, void>, void>, void>, void>, void>, void>, void>, void>, void>, void>, image_array_type = std::__1::vector, dlib::row_major_layout>, std::__1::allocator, dlib::row_major_layout> > >].
Failing expression was is_learning_problem(images,truth_dets) == true.
matrix test_object_detection_function()
invalid inputs were given to this function
is_learning_problem(images,truth_dets): false
images.size(): 1
-----------------------
```

I also get the same error when I swap a training image with the testing image. In other words, if the testing image or its box were faulty, I should not get a training result of (1,0,0) after moving that testing image into the training set...

Any clues?

Thanks!

Cool Davis! :-)

How is test_object_detection_function() supposed to test an image if you don't give it any truth data? If you look at the documentation for is_learning_problem() (or test_object_detection_function()) you will find that it's complaining because you haven't given it any truth detections.

Hi,

I'm experimenting with batch processing of images. I found that this can easily be done by giving a std::vector of images to the functor net (assuming net is the network). But I also want to change the adjust_threshold.

For a single image I can change it using:

```cpp
// temporarily create a vector so we can work with iterators
std::vector<matrix<rgb_pixel>> images;
images.push_back(img);

// create a tensor with all the data to process (a single image)
resizable_tensor temp_tensor;
net.to_tensor(&images[0], &images[0]+1, temp_tensor);

// run the network
net.subnet().forward(temp_tensor);

// convert the output of the network to detections (adjusting the threshold by -0.6)
std::vector<mmod_rect> dets;
net.loss_details().to_label(temp_tensor, net.subnet(), &dets, -0.6);
```

For batch-processing, I assume the vector "images" should contain all the images, such that a tensor is created that contains all the data of the whole batch. But how can I perform the last step (...to_label())? I assume I have to iterate through the samples of the tensor "temp_tensor" to obtain the detections for the respective image, so call the to_label function multiple times? Is there an iterator that easily loops over the samples that I can use?

Best regards

You give an iterator to a range of std::vectors of rectangles. It's nothing fancy. You should find a C++ tutorial or book that explains iterators and then it will be clear.
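Read literally, the reply above suggests that in dlib you would size a `std::vector<std::vector<mmod_rect>>` to the batch and pass its `begin()` as the label iterator (an interpretation of the reply, not verified against the library). A dlib-independent sketch of the same pattern, where the function name and the one-row-of-scores-per-sample layout are hypothetical: the function writes one detections vector per sample and advances the output iterator once per sample.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a detection: the index of a window that fired.
using detections = std::vector<std::size_t>;

// Mimics the shape of a to_label-style call: `scores` holds one row of window
// scores per sample in the batch, and one detections vector is written through
// `out` per sample, in order. A window fires if its score > adjust_threshold.
template <typename OutIt>
void to_label_sketch(const std::vector<std::vector<float>>& scores,
                     OutIt out, float adjust_threshold) {
    for (const auto& sample : scores) {
        detections dets;
        for (std::size_t i = 0; i < sample.size(); ++i)
            if (sample[i] > adjust_threshold) dets.push_back(i);
        *out = dets;
        ++out;
    }
}
```

The single-image code is the special case of a batch of one, where `&dets` plays the role of the iterator.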

OK, it was quite easy :S, I was making it way too complicated.

Thanks

Thanks for the response Davis. Appreciate it.

I did see that 'is_learning_problem(images,truth_dets)' is coming out false, but I don't see why. I generated testing.xml and training.xml the same way using the imglab tool. That is, I picked the 'truth' box the same way for both training and testing.

Here is what the testing.xml contains:

imglab dataset

Created by imglab tool.

Did it need anything other than the box definition? Note that when I move the image file and box lines from the testing XML to the training XML, it is OK.

I know the program has found testing.xml because it reports only 1 testing image. Here is the initial output:

```text
num training images: 3
num testing images: 1
detection window width,height: 35,45
overlap NMS IOU thresh: 0
overlap NMS percent covered thresh: 0
```

So it has found testing.xml and the image. The image and box sizes are OK, because I can swap them between training and testing. What else could be going wrong?

Thanks

The XML statements didn't come through in the last post, so I've replaced < with [ in them.

They have:

```text
[images]
[image file='/Volumes/hdname/third.jpg']
[box top='271' left='234' width='182' height='262'/]
[/image]
[/images]
```

What if I want to detect the objects with different aspect ratio?

For example, a cigarette alarm: the aspect ratio of a cigarette varies under different views.

The easiest solution I can think of is to train a CNN classifier, apply selective search to find interesting candidates, and then use the classifier to determine whether each candidate is a cigarette or object X.

I would use a square box right now. Although, in the near future I'm going to add support for multiple aspect ratios into the MMOD tool. But right now it only does one aspect ratio at a time.

Thanks for your help.

> I would use a square box right now.

OK, I will give this a shot; if the results are good I will tell you.

> Although, in the near future I'm going to add support for multiple aspect ratios into the MMOD tool.

Looking forward to that. It would be another big surprise (present) if we could use MMOD to detect objects of different aspect ratios.

I checked the file loss.h, and there are some loss classes that are not used in the examples, like loss_binary_hinge, loss_binary_log_, and loss_metric_. What are they for? In what situations should we try to use them? Thanks

Read the documentation. http://dlib.net/faq.html#Whereisthedocumentationforobjectfunction

Although loss_metric_ is not documented yet, because I only added it a few days ago and it's still in development.

Ah, thanks. I always jump into the header file when something confuses me, but I couldn't find many comments in loss.h (I thought the link was the same as the header file before I gave it a try).

> support for multiple aspect ratios into the MMOD tool

Is it possible for the MMOD tool to support detection of different types of objects too (banana, car, pedestrian, book, etc.)? For example, letting the detector output its top 5 guesses?

Yes, that's something I'm planning on adding soon.

I have the same problem as before:

Error while calling cudnnCreate(&handles[new_device_id]) in file /home/ale/dlib-19.2/dlib/dnn/cudnn_dlibapi.cpp:102. code: 6, reason: A call to cuDNN failed

cuDNN was detected when running CMake for dlib:

-- Found cuDNN: /usr/local/cuda-8.0/lib64/libcudnn.so

LD_LIBRARY_PATH also includes the cuDNN libraries.

The CPU version seems to work.

Any idea what could be wrong?

If you google cuDNN error code 6 you will find out it's the architecture mismatch error. Sounds like your GPU is too old.

Thanks. I appreciate your time.

Is the example code, "dnn_mmod_ex," resource-intensive?

I used the code to train on my dataset, which contains 1000 images,

and found that the program took 5 GB of GPU memory, 6 GB of main memory, and 40% of the CPU across 8 cores.

Is this normal?

How can I adjust the code to use fewer resources, for example 10% of the CPU?

Thanks.

Deep learning takes a lot of computer power. We wouldn't bother to use GPU computing if that weren't the case.

Some parameters that could be changed to use fewer resources (which I have used to keep the training process from swapping) are the crop size and the number of crops per iteration. Both will, however, affect the resulting detection accuracy, I presume.

Thank you very much for the nice post!

I got this error when I was running the training. Could anyone help me?

```text
Encountered a truth rectangle with a width and height of 19 and 40.
The image pyramid and sliding window can't output a rectangle of this shape.
This is because the rectangle is smaller than the detection window which has a width
and height of 30 and 54.
```

I had a similar problem before. When you train a model that is not square, you have to limit the amount of rotation used for data augmentation. The command for this can be found in an earlier comment.

Hi Davis,

With this deep learning API, can you also get the associated landmarks for the face? If so, is it possible to use the API to train on a different set of landmarks?

Thanks!

Hi,

I have some problems getting the training to work properly. I almost always get a test result of 1 0 0 (so no detections are found, with an obviously perfect precision), even on the training set.

Does the training data have to be in some specific format? I have tried different datasets, model sizes, ... Now I'm experimenting with the head annotations of the Town Centre dataset (more or less square annotations). I started by using the full images and all annotations per image (no luck there).

I saw that the training data for the face detector uses only square patches (250x250 or 350x350), with the annotation positioned in the center and taking up between 23% and 45% of the patch. Is this required? (I also tried this format, without success so far.)

I also saw that in most of these patches the other annotations are set to ignore, with a few exceptions (which lead to a non-zero NMS threshold). Is there some logic to which ones are ignored?

Are the model size, cropped patch size, ... related to the size of the annotations in the training data?

best regards

There are no hard coded limits or special formatting requirements in the code. You do have to make training data that make sense in the usual machine learning way though.

Man, amazing work Davis!

Do you have any plans to support GPU for face/object detection? Speeding up the training as step 1 is pretty fantastic. Just curious if you plan to support OpenCL or something similar for detecting faces in images, reducing the need for clusters and putting the power in the hands of the average guy! I plan to look into dlib's core and see how feasible it is to do myself.

> Do you have any plans to support GPU for face/object detection?

I think dlib already implements this with cuDNN?

By the way, today I deployed a small example on a Windows laptop without CUDA support, and it crashed suddenly (I put cublas64_80.dll, cudart64_80.dll, cudnn64_5.dll, and curand64_80.dll in the exe's folder). Is this normal? Thanks

Can you tell me the steps to compile dlib 19.2 with deep learning support?

Use CMake; this page tells you what to type: http://dlib.net/compile.html

Also, if anyone is getting CUDA errors, it's probably because CUDA isn't installed correctly on your computer. I would try reinstalling it.

Hi Guys,

I didn't have a chance to thank you for this work. This is amazing.

Sorry for a rookie question, but I'm struggling with something I don't understand in your example. Using the imglab tool I defined my train/test object XMLs, but dnn_mmod_ex keeps telling me:

```text
Encountered a truth rectangle located at [(10, 51) (23, 70)] that is too close to the edge
of the image to be captured by the CNN features.
```

Depending on the cropper and mmod_options settings, the values in brackets change. It seems like a pretty self-explanatory message, so I wanted to know exactly which region causes the error. I ended up with one image in the train XML that has one region in its center, which is definitely not too close to the edge of the image. I noticed that this error can appear when the regions are too small.

Could you please shed more light on this?

Post this as a github issue and I'll take a look at it. Include all the files necessary to reproduce what you are seeing.

@Davis King

Thanks for doing such an amazing job! This can detect human faces perfectly. However, I'd like to do further operations on the detected object (say, recognition), but I don't quite understand what I could do with it besides showing an overlay. Sorry for this kind of stupid question. Is there any reference you would suggest?

> However I'd like to further operation with the object detected(say, recognition)

Face recognition is much more difficult than face detection. If you are interested in this topic, you can google

"Deep face", "vgg face" and "DeepID".

DeepID provides state-of-the-art performance with relatively few training examples.

Hi Davis,

Is it possible to switch to CPU operation if the computer does not support CUDA? Thanks

What changes have to be made to process a bunch of images, as described in the code comment? Please help

```cpp
matrix<rgb_pixel> img;
load_image(img, argv[i]);

// Upsampling the image will allow us to detect smaller faces but will cause the
// program to use more RAM and run longer.
while(img.size() < 1800*1800)
    pyramid_up(img);

// Note that you can process a bunch of images in a std::vector at once and it runs
// much faster, since this will form mini-batches of images and therefore get
// better parallelism out of your GPU hardware. However, all the images must be
// the same size. To avoid this requirement on images being the same size we
// process them individually in this example.
auto dets = net(img);
```

> What changes have to be made to process a bunch of images as given in the comment? Please help

I never tried it (I don't have a strong GPU), but I guess this should work:

```cpp
std::vector<matrix<rgb_pixel>> imgs; // std::vector is C++'s dynamic array
constexpr size_t batch_size = 10;

while(img.size() < 1800*1800)
    pyramid_up(img);

// std::move takes over img's resources; don't move it if you want to reuse img
imgs.emplace_back(std::move(img));

if(imgs.size() >= batch_size){
    auto dets = net(imgs);
    imgs.clear(); // reset the vector for the next batch
}
```

Yes, that's what you do. Pack them into a std::vector and give them to the network.
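One detail the batching snippet leaves out is the last partial batch: if the number of images is not a multiple of the batch size, the leftovers are never passed to the network. A minimal, dlib-independent sketch of a batcher that flushes the remainder (the class and its names are hypothetical). With dlib, the callback would be something like a call to `net(batch)`, and all images in a batch would still need to be the same size:

```cpp
#include <cstddef>
#include <utility>
#include <vector>

// Collects items into batches of `batch_size` and hands each full batch to
// `process`; flush() hands over whatever is left. `Item` stands in for an
// image type and `Fn` for the network call (both hypothetical here).
template <typename Item, typename Fn>
class batcher {
public:
    batcher(std::size_t batch_size, Fn process)
        : batch_size_(batch_size), process_(std::move(process)) {}

    void add(Item item) {
        pending_.push_back(std::move(item));
        if (pending_.size() >= batch_size_) flush();
    }

    // Call once after the last add() so leftover items are not dropped.
    void flush() {
        if (pending_.empty()) return;
        process_(pending_);
        pending_.clear();
    }

private:
    std::size_t batch_size_;
    Fn process_;
    std::vector<Item> pending_;
};
```

With 25 items and a batch size of 10, the callback sees batches of 10, 10, and (after `flush()`) 5.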

CMake will automatically use the CPU if CUDA isn't available, but you can toggle it by going into cmake-gui and setting the DLIB_USE_CUDA option.

> CMake will automatically use the CPU if CUDA isn't available.

I think you are talking about compile time?

The problem I have is at runtime: on a target host that does not support CUDA, the program crashes if I compile the app with CUDA. Is it possible to switch from GPU mode to CPU mode if the target host does not support CUDA? Thanks

No, you have to compile for a particular target architecture.

Hi Davis,

A quick question: what does a negative loss during training mean in the dlib implementation?

I see that in loss.h:82 we have

```cpp
const float temp = 1-y*out_data[i];
```

so does a negative loss mean that out_data is bigger than 1? Is it overfitting, or a jump out of a local minimum? Should we prevent it with a loop condition like this?

```cpp
while ((trainer.get_learning_rate() >= 1e-4) && (trainer.get_average_loss() > 0))
```

Or maybe decrease the steps-without-progress threshold? Or add more training examples so that training starts with a bigger loss?
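As background to this question: in the textbook binary hinge loss, a quantity like `temp = 1 - y*out` only counts when it is positive, so each sample contributes max(0, 1 - y·f(x)). That is never negative; a confidently correct sample (y·f(x) > 1) simply contributes zero. This sketch shows the textbook definition only and does not claim how dlib's MMOD loss computes the average it reports:

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Textbook binary hinge loss averaged over samples: labels y are +1 or -1 and
// out holds the raw network outputs. Each term is max(0, 1 - y*out) >= 0.
double average_hinge_loss(const std::vector<float>& y, const std::vector<float>& out) {
    double loss = 0;
    for (std::size_t i = 0; i < y.size(); ++i) {
        const double temp = 1.0 - static_cast<double>(y[i]) * out[i];
        loss += std::max(0.0, temp);  // confidently correct samples add nothing
    }
    return y.empty() ? 0.0 : loss / static_cast<double>(y.size());
}
```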

Hi Davis,

Thank you for such a nice tool. Of all the CNN tools I have explored so far, this is the easiest one.

I built dlib 19.2 with CUDA 8.0, cuDNN 5.1, and Visual Studio 2015 Update 3, without OpenCV. Then I tried "dnn_mmod_face_detection_ex", which works perfectly, but I get an error while running "dnn_mmod_ex". For training and testing I use the face dataset provided with dlib.

Here is the error message:

num training images: 4

num testing images: 5

detection window width,height: 40,40

overlap NMS IOU thresh: 0.0781701

overlap NMS percent covered thresh: 0.257122

step#: 0 learning rate: 0.1 average loss: 0 steps without apparent progress: 0

Error while calling cudaMalloc(&data, new_size*sizeof(float)) in file C:\AtiqWorkSpace\dlib_CNN\dlib-19.2\dlib\dnn\gpu_data.cpp:191. code: 2, reason: out of memory

Any idea why this is happening? I ran the tool on an Amazon EC2 machine, which should have sufficient memory.

Hi Rasel,

Try reducing the number of crops in:

cropper(150, images_train, face_boxes_train, mini_batch_samples, mini_batch_labels);
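Why this helps: the memory needed per mini-batch grows linearly with the number of crops. A back-of-the-envelope sketch that counts only the input tensor, assuming 200x200 RGB crops (check `cropper.set_chip_dims` in your copy of dnn_mmod_ex.cpp) and 4-byte floats; every layer's activations and gradients scale with the crop count in the same way, so the real total is much larger:

```cpp
#include <cstddef>

// Bytes for one float tensor of shape n x k x nr x nc (samples, channels, rows, cols).
constexpr std::size_t tensor_bytes(std::size_t n, std::size_t k,
                                   std::size_t nr, std::size_t nc) {
    return n * k * nr * nc * sizeof(float);
}

// Assumed 200x200 RGB crops; input tensor only.
constexpr std::size_t input_150 = tensor_bytes(150, 3, 200, 200);  // 72,000,000 bytes with 4-byte floats
constexpr std::size_t input_80  = tensor_bytes(80, 3, 200, 200);   // 38,400,000 bytes with 4-byte floats
```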

Thanks Rafal.

Reducing the number of crops resolved the memory issue. I tried 80 and was able to train and test, but the test result is very poor: no faces detected at all. I am not sure whether that is because of the number of crops (mini_batch_size) or some other issue.

Hello Davis,

I want to use max-margin object detection for face detection. For this, I tried modifying the dnn_mmod_ex.cpp file that is provided in the examples. However, I would like the detector to detect more faces, even at the cost of some false positives. I understand that I have to change the adjust_threshold parameter, but where do I change it? I tried to read the documentation but could not find anything related to this. Any help would be much appreciated!

If you want to find faces, use one of the pretrained face detectors that come with dlib. The example programs with the word "face" in their names show you how to do it.

Can the max-margin loss be applied in Caffe?

I really need to train my own DNN model for this, as the faces I'm detecting are in grayscale, low-resolution images and the pretrained model does not work well on them. I went through the example programs you suggested but could not find any threshold setting that gives me more detections. Is there a particular example you are referring to?
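The thread doesn't show where `adjust_threshold` is passed in, so this doesn't guess at dlib's API. Conceptually, lowering a detection threshold just admits more, weaker candidates. A standalone sketch of that trade-off, where `Detection` and `filter_detections` are hypothetical names:

```cpp
#include <vector>

// Hypothetical detection record: a score plus box coordinates.
struct Detection {
    double score;
    long left, top, right, bottom;
};

// Keeps every detection scoring above base_threshold + adjust; a negative
// adjustment keeps more (and weaker) detections, i.e. more false positives.
std::vector<Detection> filter_detections(const std::vector<Detection>& dets,
                                         double base_threshold, double adjust) {
    std::vector<Detection> kept;
    for (const Detection& d : dets)
        if (d.score > base_threshold + adjust)
            kept.push_back(d);
    return kept;
}
```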

Hi Rasel, did you try increasing the iterations-without-progress value to something like 1000?

Hi Adithya,

Thanks for your suggestions. I did not increase the number of iterations without progress. I am running the training again, and this time I am going to use the network configuration and all the parameters described in the pretrained face detector example.

Hi there,

How can I change the aspect ratio? I tried changing some settings, but the 2:1 ratio is always there.

I'm processing the images via random_cropper_ex. Actually, some images are red in some random crops and not in others. I have resized the images to a width of 600px, keeping the aspect ratio.

I'm trying to figure out which images are better for DNN training, but I still haven't figured it out.

Hi,

I trained a model with the same CNN architecture as dnn_mmod_face_detection_ex,

with these parameters:

mmod_options = 40*80 (the objects to be detected are rectangular, not square like faces)

iterations_without_progress_threshold= 8000

max_rotation_degrees =15

total_crop =50

Training was successful, but I got the following error message while testing:

Error detected at line 591.

Error detected in file C:/AtiqWorkSpace/dlib_CNN/dlib-19.2/dlib/dnn/cuda_dlib.cu.

Error detected in function void dlib::cuda::affine_transform(dlib::tensor &, const dlib::tensor &, const dlib::tensor &, const dlib::tensor &).

Failing expression was A.nr()==B.nr() && B.nr()==src.nr() && A.nc()==B.nc() && B.nc()==src.nc() && A.k() ==B.k() && B.k()==src.k().

A.nr(): 556

B.nr(): 556

src.nr(): 5385

A.nc(): 148

B.nc(): 148

src.nc(): 1994

A.k(): 16

B.k(): 16

src.k(): 16

For testing I used the example dnn_mmod_face_detection_ex, since I trained the model using that example's CNN architecture.

Don't train with affine layers. You should use the bn_con (the batch normalization layers) instead.

Hi Davis,

I'd like to track an object in a video after it is detected, which requires the object's position. How do I get attributes such as its position and size?

Thank you for your contribution.
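Each detection carries a rectangle; the wrapper code posted further down this thread reads `left()`, `top()`, `width()` and `height()` from it. A standalone sketch of turning such a box into a center and size for a tracker, using a hypothetical `Box` struct that mirrors dlib::rectangle's convention that right and bottom are inclusive pixel coordinates (so width = right - left + 1):

```cpp
// Hypothetical box type mirroring dlib::rectangle's inclusive right/bottom.
struct Box { long left, top, right, bottom; };

struct BoxInfo { double cx, cy; long width, height; };

// Center and size of a detection box, e.g. to initialize a tracker.
BoxInfo describe(const Box& b) {
    const long w = b.right - b.left + 1;
    const long h = b.bottom - b.top + 1;
    return {b.left + (w - 1) / 2.0, b.top + (h - 1) / 2.0, w, h};
}
```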

Hi Davis, thanks for the amazing job!

I'm reading this post and I think it can help me. I'm new to this and I'm a little lost.

I need to detect whether the tongue is down, up, left, or right. If I could detect it with points, like the face landmark detector, that would be nice too.

Can you give me some advice on how to achieve this?

Hello Davis,

I have a very serious issue with the code. I have made a Python wrapper of the code using the Boost library so that I can access the C++ code from Python. From Python I send a frame as an ndarray; my C++ program converts the ndarray to a Mat and subsequently to dlib's image format. The code runs perfectly on the CPU, but on a system with a GPU it gets a segmentation fault when executed from Python.

I tested the code on a system without a GPU and it runs fine. When I compile my code on the other system with a GPU, the line `auto dets=net(img)` (or `auto dets=net(imgs)`) gives a segmentation fault. I am not sure, but the issue seems to come from dlib/dnn.h.

```cpp
// Author: Samarth Tandon (samartht@cdac.in)
// License: BSD
// Last modified: Dec 1, 2016
// Wrapper for most external modules
#include
#include
#include
#include
// Dlib Includes
#include
#include
#include
#include
#include
#include
// Opencv includes
#include
// np_opencv_converter
#include "np_opencv_converter.hpp"

using namespace dlib;
namespace py = boost::python;
using namespace std;

// ----------------------------------------------------------------------------------------

template <long num_filters, typename SUBNET> using con5d = con<num_filters,5,5,2,2,SUBNET>;
template <long num_filters, typename SUBNET> using con5  = con<num_filters,5,5,1,1,SUBNET>;
template <typename SUBNET> using downsampler  = relu<affine<con5d<32, relu<affine<con5d<32, relu<affine<con5d<16,SUBNET>>>>>>>>>;
template <typename SUBNET> using rcon5  = relu<affine<con5<45,SUBNET>>>;
using net_type = loss_mmod<con<1,9,9,1,1,rcon5<rcon5<rcon5<downsampler<input_rgb_image_pyramid<pyramid_down<6>>>>>>>>;

// ----------------------------------------------------------------------------------------

py::list detect(const cv::Mat& in) {
    cv::Mat frame;
    frame = in.clone();
    string file = "/tmp/frame.jpeg";
    const char dNN_path[100] = "/home/cdac/workspace/Dlib_face_detector/mmod_human_face_detector.dat";
    net_type net;
    deserialize(dNN_path) >> net;
    size_t constexpr batch_size = 1;
    dlib::rectangle cords;
    // make a standard vector imgs
    std::vector<matrix<rgb_pixel>> imgs; // RGB, compatible with OpenCV; use for grayscale
    matrix<rgb_pixel> img;               // RGB pixel, compatible with OpenCV for grayscale
    cv::imwrite(file, frame);             // save file in /tmp
    cv::waitKey(10);
    dlib::load_image(img, file);          // load file from /tmp to convert into dlib format
    imgs.emplace_back(std::move(img));
    auto dets = net(imgs);
    int i = 0;
    py::list test_list;
    py::tuple arr, arr1;
    for (auto&& frms : dets)
    {
        auto&& d = frms;
        //std::cout << typeid(d).name() << endl;
        for (auto&& d : frms)
        {
            cords = d;
            cv::Rect roi(cords.left(), cords.top(), cords.width(), cords.height());
            arr = py::make_tuple(roi.x, roi.y, roi.x + roi.width, roi.y + roi.height);
            test_list.append(arr);
            //cv::rectangle(frame, cvPoint(roi.x,roi.y), cvPoint(roi.x+roi.width,roi.y+roi.height), CV_RGB(255,0,0), 3, 3);
            //cv::imwrite(file, frame);
        }
    }
    return test_list;
}

// Wrap a few functions and classes for testing purposes
namespace fs { namespace python {
BOOST_PYTHON_MODULE(detector_module)
{
    // Main types export
    fs::python::init_and_export_converters();
    py::scope scope = py::scope();
    // Basic test
    py::def("detect", &detect);
}
} // namespace python
} // namespace fs
```

Python code:

```python
import cv2
import detector_module

img = cv2.imread("path of image")
detector_module.detect(img)  # detect is the function
```

Segmentation fault.

Post a github issue with a minimal but complete C++ program that reproduces the crash. Include everything necessary to run it. Don't post a python example since it's very likely the bug is in something you did wrong in the python C API rather than anything to do with dlib. So if you can't reproduce the crash in a pure C++ program then the bug isn't in dlib.

Hey Davis, thanks for the reply. Can you please help me figure out why this error occurs:

Error detected at line 1718.

Error detected in file /home/surveillance6/dlib-19.2/dlib/dnn/cpu_dlib.cpp.

Error detected in function void dlib::cpu::tensor_conv::operator()(dlib::resizable_tensor&, const dlib::tensor&, const dlib::tensor&, int, int, int, int).

Failing expression was filters.nr() <= data.nr() + 2*padding_y.

Filter windows must be small enough to fit into the padded image.

Please help.
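The failing check says a convolution filter was larger than its padded input, which likely means the image (or some intermediate feature map) was too small for the network. A rough sketch of the arithmetic, assuming the face detector's stack of three 5x5 stride-2 convolutions, three 5x5 stride-1 convolutions and a final 9x9 one, and assuming (my reading of dlib's `con` defaults) padding 0 for strided layers and filter/2 otherwise. It ignores how the input pyramid tiles several scales into one image, so treat the result as a rough lower bound, not dlib's exact rule:

```cpp
// Geometry of one convolution layer along the row axis (columns work the same way).
struct ConvLayer { long filter, stride, padding; };

// Output rows for one layer, or -1 when the filter does not fit, i.e. when the
// check "filters.nr() <= data.nr() + 2*padding_y" from the error would fail.
long conv_out_rows(long in_rows, const ConvLayer& l) {
    if (l.filter > in_rows + 2 * l.padding) return -1;
    return 1 + (in_rows + 2 * l.padding - l.filter) / l.stride;
}

// Assumed stack: three 5x5 stride-2 convs (padding 0), three 5x5 stride-1 convs
// (padding 2), then the final 9x9 stride-1 conv (padding 4).
const ConvLayer kFaceNetLayers[] = {
    {5, 2, 0}, {5, 2, 0}, {5, 2, 0}, {5, 1, 2}, {5, 1, 2}, {5, 1, 2}, {9, 1, 4}};

// True if an input with this many rows makes it through every layer.
bool fits(long rows) {
    for (const ConvLayer& l : kFaceNetLayers) {
        rows = conv_out_rows(rows, l);
        if (rows < 1) return false;
    }
    return true;
}

// Smallest input height that passes all the checks under these assumptions.
long min_input_rows() {
    long rows = 1;
    while (!fits(rows)) ++rows;
    return rows;
}
```

Under these assumptions the smallest height that survives all three stride-2 layers is 29 rows (29 → 13 → 5 → 1).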

Hi, I am playing a little bit with this library, great work! I have a question.

Can the dnn_mmod_ex example classify more than one class? In other words, if the training set contains images with different labels, is it able to classify them? Or do I have to implement a specific loss layer for my classes?

Thanks.

loss_mmod only outputs one class. However, the extension to support multiple labels isn't very difficult. I'm going to update it in an upcoming release to add such support. But right now it's just one label at a time.

OK, thank you very much for your work. I will wait for the update.

Hi Davis,

Is there any reason for only supporting cuDNN 5 or later? It would be awesome if dlib could support earlier versions of cuDNN, like cuDNN v2, which is used by the Jetson TK1.

Earlier versions of cuDNN have a different and incompatible API and are also missing important features. Moreover, from looking at NVIDIA's web page, it sure looks like current versions of cuDNN can be used with the Jetson TK1, as is claimed here for instance: https://developer.nvidia.com/embedded/jetpack

Hello Davis,

Is there any way I can give a cv::Mat image directly to the network, i.e. `dets = net(img)`? It takes a dlib image format. Is there any way to give a cv::Mat image to the network, including for training, without conversion? I am taking the data from an RTSP or video stream.

Yes, you can use any inputs you like. Just create a new input layer that takes the input object you want to use. It's not complex and only a few lines of code. The documentation explains how to do it in detail.
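The core job of such an input layer is copying pixels from the source object into the network's float tensor. A self-contained sketch of that conversion for an 8-bit interleaved BGR buffer (the layout of a continuous OpenCV `CV_8UC3` cv::Mat), assuming a planar channel layout (all R values, then G, then B), which matches dlib tensors being samples × channels × rows × columns. Any mean subtraction or scaling a real input layer applies is left out, and the function name is hypothetical; see the dlib input layer documentation for the actual interface:

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Converts interleaved 8-bit BGR pixels into planar RGB floats:
// all R values first, then all G, then all B.
std::vector<float> bgr_interleaved_to_rgb_planar(const std::uint8_t* bgr,
                                                 std::size_t rows, std::size_t cols) {
    const std::size_t plane = rows * cols;
    std::vector<float> out(3 * plane);
    for (std::size_t p = 0; p < plane; ++p) {
        out[p]             = bgr[3 * p + 2];  // R
        out[plane + p]     = bgr[3 * p + 1];  // G
        out[2 * plane + p] = bgr[3 * p + 0];  // B
    }
    return out;
}
```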

Hello Sir,

I studied the file dnn_mmod_ex.cpp. In the comments you mention the training stage and XML files.

1) Why did you also create an XML file for the test images?

2) Does the program train itself again each time we run it?

3) How can I use real-time live video (such as a webcam or USB3 camera) as input instead of test images?

4) GPU cards are expensive, so I plan to use FPGAs. Do you have any suggestions for this?

Thank you, Davis, for really exciting work!

Could you please clarify a few points for me?

I ran your dnn_mmod_face_detection_ex to obtain processing timings and got the following using the GPU (and CUDA 7.5):

processing one image in a batch (a vector containing one 640x480 image) takes ~90ms;

processing 10 images in a batch (10 640x480 images) takes ~760ms (76ms per image).

1. How did you obtain 45ms per single image and 18ms per image in a batch of images?

2. What is the minimum face size that can be detected without upsampling the image?

Thank you

I used a Titan X GPU; maybe yours is slower. There is also CPU processing that has to happen. Maybe you didn't turn on compiler optimizations?

The minimal face is about 80x80, as explained in the example program.

Hi Davis,

Really awesome work.

Just wanted to ask if this object detector can also handle rotations of the objects it's trained on, or do I need to manually add rotated samples?

Thanks

It can handle rotations. However, there need to be examples of rotated objects in your training data for it to learn about them.

Davis, doesn't it help if we call e.g. cropper.set_max_rotation_degrees(180.0) before the training starts?

I thought this would extract rotated training samples? (But of course rotated only around the "z" axis.)

I'm working on a problem that I know is rotation invariant (around the "z" axis), and I thought setting max_rotation_degrees to 180.0 should be enough to handle this. Did I miss something?

Of course, to get e.g. faces rotated around the "y" axis, rish195 still needs to collect actual training data (or at least sample projections from 3D measurements =).

Thanks, and yes, I meant rotation around the z axis. I will be collecting samples for rotation around y.

If you use the random_cropper (or any other tool) to add rotations into your training data then you will have rotations in your training data ;)

Right :). And it seems that dnn_mmod_ex.cpp adds rotations up to +/- 30 degrees, which is the default value defined in random_cropper.h.
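To illustrate what adding in-plane ("z" axis) rotations does to the labels, here is a standalone sketch; it is not random_cropper's actual code, and `BoxF`, `random_angle` and `rotate_box` are hypothetical names. It draws an angle uniformly within ±max_rotation_degrees and replaces a box by the axis-aligned box enclosing its rotated corners, which also shows why boxes get looser at large angles:

```cpp
#include <algorithm>
#include <cmath>
#include <random>

struct BoxF { double left, top, right, bottom; };

// Draws a rotation angle uniformly from [-max_degrees, max_degrees].
double random_angle(std::mt19937& rng, double max_degrees) {
    std::uniform_real_distribution<double> dist(-max_degrees, max_degrees);
    return dist(rng);
}

// Rotates the four corners of a box about (cx, cy) and returns the axis-aligned
// box enclosing them, i.e. what the label becomes after rotating the image.
BoxF rotate_box(const BoxF& b, double degrees, double cx, double cy) {
    const double kPi = 3.14159265358979323846;
    const double a = degrees * kPi / 180.0;
    const double c = std::cos(a), s = std::sin(a);
    const double xs[4] = {b.left, b.right, b.left, b.right};
    const double ys[4] = {b.top, b.top, b.bottom, b.bottom};
    BoxF out{1e300, 1e300, -1e300, -1e300};
    for (int i = 0; i < 4; ++i) {
        const double x = cx + (xs[i] - cx) * c - (ys[i] - cy) * s;
        const double y = cy + (xs[i] - cx) * s + (ys[i] - cy) * c;
        out.left = std::min(out.left, x);
        out.top = std::min(out.top, y);
        out.right = std::max(out.right, x);
        out.bottom = std::max(out.bottom, y);
    }
    return out;
}
```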

> I would use a square box right now.

I gave the square box a try, and the results are far from good: the training and testing results are 1 0 0. I guess it is because square boxes include a lot of negative background, which makes it hard for the trainer to differentiate positive and negative objects.

To find out whether my suspicion is true, I trained the detector with a different aspect ratio; this time training progress and results were much better.

Will the next version of dlib (19.3) support multiple aspect ratios and multiple labels, and allow us to use a pretrained model to train an object detector?

The shape of the box shouldn't matter since the detector we are talking about is a point detector. That is, the area of the image it looks at doesn't have anything to do with the shape of the box. The detector just scans a DNN over the image and finds the center of an object. The area of the image the DNN looks at is entirely determined by the architecture of the DNN, not the shape of the boxes in the training data. So what you are saying about looking at negative background isn't right. If you get bad results when changing box sizes then it's probably just some mistake in your data or setup or how you apply data augmentation. Who knows.

I will be upgrading the detector to support multiple aspect ratios and object categories. This will probably happen in dlib 19.4.

> That is, the area of the image it looks at doesn't have anything to do with the shape of the box.

Maybe we are not talking about the same thing?

What I mean is that the square box contains too much negative area. Assume the aspect ratio of the object is 1:4; to make the dlib trainer work,

I draw the bounding box with an aspect ratio of 4:4 == 1:1, which means 3/4 of this bounding box's area is negative (background).

It is like drawing face bounding boxes 4 times larger: compared with the original bounding boxes, 3/4 of each box is background, and only 1/4 is the object we want to detect (the face).

>I will be upgrading the detector to support multiple aspect ratios and object categories. This will probably happen in dlib 19.4.

Looking forward to the update :)

I could not install dlib for Python on Windows 10.

I get the error messages below.

Can anybody help me?

D:\Yazilim\dlib\dlib-19.2>python setup.py install --yes DPYTHON3

running install

running bdist_egg

running build

Detected Python architecture: 32bit

Detected platform: win32

Removing build directory D:\Yazilim\dlib\dlib-19.2\./tools/python/build

Configuring cmake ...

-- Building for: NMake Makefiles

-- The C compiler identification is unknown

-- The CXX compiler identification is unknown

CMake Error in CMakeLists.txt:

The CMAKE_C_COMPILER:

cl

is not a full path and was not found in the PATH.

To use the NMake generator with Visual C++, cmake must be run from a shell

that can use the compiler cl from the command line. This environment is

unable to invoke the cl compiler. To fix this problem, run cmake from the

Visual Studio Command Prompt (vcvarsall.bat).

Tell CMake where to find the compiler by setting either the environment

variable "CC" or the CMake cache entry CMAKE_C_COMPILER to the full path to

the compiler, or to the compiler name if it is in the PATH.

CMake Error in CMakeLists.txt:

The CMAKE_CXX_COMPILER:

cl

is not a full path and was not found in the PATH.

To use the NMake generator with Visual C++, cmake must be run from a shell

that can use the compiler cl from the command line. This environment is

unable to invoke the cl compiler. To fix this problem, run cmake from the

Visual Studio Command Prompt (vcvarsall.bat).

Tell CMake where to find the compiler by setting either the environment

variable "CXX" or the CMake cache entry CMAKE_CXX_COMPILER to the full path

to the compiler, or to the compiler name if it is in the PATH.

-- Configuring incomplete, errors occurred!

See also "D:/Yazilim/dlib/dlib-19.2/tools/python/build/CMakeFiles/CMakeOutput.log".

See also "D:/Yazilim/dlib/dlib-19.2/tools/python/build/CMakeFiles/CMakeError.log".

error: cmake configuration failed!

Try using pip install dlib, since that should download the precompiled binaries for Windows. But if you want to compile it yourself, you need to install Visual Studio, which looks to be your problem.

Hey Davis,

I'm using your pretrained model to detect faces in ~1920x1080 images in a C++ project.

I've found the memory usage of this model to be extraordinarily high, especially compared to other neural networks.

Previously I had multi-threaded the FHOG detector and it would use <1GB with 4-5 threads each with their own instance of the FHOG detector.

With the new DNN-based mmod detector I'm finding that the memory usage approaches 4GB with no multithreading and just a single instance of the network. The network is running on CPU, no AVX, but SSE2 is enabled.

I'm using dnn_mmod_face_detection_ex.cpp and the provided mmod_human_face_detector.dat model to replicate this issue, and Visual Studio 2015's diagnostic tools to measure the process memory.

Considering the model file is <1MB, and the image I'm using is <1MB, I can't fathom why it would be using this much memory. Do you have any ideas on what is going on and how I might fix this or reduce the memory usage?

That can happen. The CPU code does the convolutions by building a toeplitz matrix and shoving it through BLAS. That will result in a bigger amount of RAM usage. There is definitely room to make the CPU side faster, like by using the SIMD enabled convolution routines the HOG code uses, but I haven't gotten around to it since everything I'm doing is on the GPU.
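A small standalone sketch of the Toeplitz (im2col) approach, for a single channel with stride 1 and no padding; this is the textbook formulation, not dlib's code. The memory point is the intermediate matrix: it stores one row of k*k input values per output position, so for a 5x5 filter it holds roughly 25 copies of each input channel before BLAS ever runs:

```cpp
#include <cstddef>
#include <vector>

// Single-channel, stride-1, no-padding im2col (assumes k <= rows and k <= cols):
// each row of the returned matrix holds the k*k pixels under one filter position.
std::vector<float> im2col(const std::vector<float>& img, std::size_t rows,
                          std::size_t cols, std::size_t k) {
    const std::size_t out_r = rows - k + 1, out_c = cols - k + 1;
    std::vector<float> m(out_r * out_c * k * k);
    for (std::size_t r = 0; r < out_r; ++r)
        for (std::size_t c = 0; c < out_c; ++c)
            for (std::size_t i = 0; i < k; ++i)
                for (std::size_t j = 0; j < k; ++j)
                    m[((r * out_c + c) * k + i) * k + j] = img[(r + i) * cols + (c + j)];
    return m;
}

// Cross-correlation as a matrix-vector product over the im2col matrix; a real
// implementation would hand this step to a BLAS gemm/gemv call.
std::vector<float> conv_via_im2col(const std::vector<float>& img, std::size_t rows,
                                   std::size_t cols, const std::vector<float>& filt,
                                   std::size_t k) {
    const std::vector<float> m = im2col(img, rows, cols, k);
    const std::size_t n_out = (rows - k + 1) * (cols - k + 1);
    std::vector<float> out(n_out, 0.0f);
    for (std::size_t p = 0; p < n_out; ++p)
        for (std::size_t q = 0; q < k * k; ++q)
            out[p] += m[p * k * k + q] * filt[q];
    return out;
}
```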

Thanks for the response.

I have my own C++ framework for running Torch models natively without RAM usage issues, and it runs faster.

I'm not familiar with Toeplitz matrices or how/why you're using them, but I'll try to dive into your implementation and see if I can find ways to improve it.

It would be great if I could get this detector running in my project without it using up all of the system's RAM.

This is the class that does the convolutions: https://github.com/davisking/dlib/blob/master/dlib/dnn/tensor_tools.h#L769

It calls the CUDA version, which just calls cuDNN, or it calls the CPU version. All you need to do is rework the CPU version, which isn't a huge deal if you only support the simple cases.

Hi Davis, thanks for the help; I solved the issues with your help. My concern now is how to improve performance. I have configured the code to accept frames from a video stream using OpenCV and process them on the GPU. Can you suggest which parameters can be tuned to improve performance? For example, the pyramid size is one of the parameters.

Hi Davis, I am using an NVIDIA GeForce GTX 970 and configured the settings, i.e. using CUDA with cuDNN and AVX_INSTRUCTIONS=ON.

So, when I run the program with upsampling of the images, it gives 100% accuracy but takes a lot of time per image, whereas without upsampling it takes less time (approx. 50ms) but the accuracy is much lower.

What configuration should I use to improve the accuracy?

One more clarification: were your results obtained with or without upsampling?
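Upsampling trades time for small-face recall: every doubling of width and height quadruples the pixels the network has to scan. A sketch of the arithmetic behind the example's `while(img.size() < 1800*1800) pyramid_up(img);` loop, assuming each `pyramid_up` call doubles both dimensions (`upsample_until` is a hypothetical name):

```cpp
#include <cstddef>

struct UpsampleResult { int steps; std::size_t width, height; };

// Mimics "while (img.size() < min_pixels) pyramid_up(img);", assuming each
// pyramid_up doubles both width and height.
UpsampleResult upsample_until(std::size_t width, std::size_t height,
                              std::size_t min_pixels) {
    UpsampleResult r{0, width, height};
    while (r.width > 0 && r.height > 0 && r.width * r.height < min_pixels) {
        r.width *= 2;
        r.height *= 2;
        ++r.steps;
    }
    return r;
}
```

For a 640x480 frame this takes two steps, ending at 2560x1920, i.e. 16 times as many pixels as the original, which is roughly why the upsampled runs are so much slower.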

Davis, I have a requirement to train custom object detectors. It used to take less than a minute to train HOG-based detectors, but although the DNN detectors are superior, they take 3+ hours for me, even on an NVIDIA GTX 1080 GPU. I am training with about 35-40 boxes as training data as an experiment. Is this normal?

Secondly, with the earlier HOG-based detectors, I could stack multiple detectors, run them all at once on the same image, and get matches based on which ones fired. Is there any documentation on how to achieve this with the DNN-based method?

Say I'd like to train about 20-25 different object types and detect them in my dataset; what would be the best way to use dlib to achieve this? Also, what kind of training time and test (fps) performance can I expect?

Yes, the CNN training will take several hours. Possibly more depending on the network architecture and other settings.

There is no function in dlib to quickly run different CNN detectors as a group. Each CNN must be run on its own.

For really small datasets you are still probably better off with the HOG detector. Although it's possible that setting up a special CNN that uses HOG for the first few layers might be better, both in terms of speed and training data requirements, than either the regular HOG detector or CNN detector discussed in this blog post. I haven't tried such a thing, but it's definitely something I would consider if dlib's regular HOG detector isn't powerful enough but the full CNN is too slow.

See this blog post for speed numbers for the detector.
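Since each CNN has to be run on its own, the practical pattern is a plain loop over the detectors, tagging each result with the detector's label. A library-agnostic sketch where `Hit`, `Detector` and `run_all` are hypothetical names; any callable, such as a lambda wrapping a dlib network, can be plugged in (boxes are omitted for brevity):

```cpp
#include <functional>
#include <string>
#include <utility>
#include <vector>

// Hypothetical types: a detector maps an image to a list of detection scores.
struct Hit { std::string label; double score; };

template <typename Image>
using Detector = std::function<std::vector<double>(const Image&)>;

// Runs each detector on the same image in turn and tags its hits with a label.
template <typename Image>
std::vector<Hit> run_all(const Image& img,
                         const std::vector<std::pair<std::string, Detector<Image>>>& detectors) {
    std::vector<Hit> hits;
    for (const auto& [label, detect] : detectors)
        for (double score : detect(img))
            hits.push_back({label, score});
    return hits;
}
```

The total cost is the sum of the individual detectors' costs, so with 20-25 separate networks the per-image time grows accordingly.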

Hi Davis,

Thanks for your work!

One question, what's the license for the dnn face detection model (from the link provided in the example)?

All the dlib model files are in the public domain. Do whatever you want with them.

Hello Davis, I am having an issue that says:

"Error while calling cudaMallocHost(&data, new_size*sizeof(float)) in file /home/surveillance6/dlib-19.2_2/dlib/dnn/gpu_data.cpp:181. code: 2, reason: out of memory"

Can you please explain why this issue occurs? The graphics card I am using is an NVIDIA GeForce GTX 1080 with 8GB RAM, CUDA version 8.0.

Because you are trying to use more memory than your card has? ;)

:) True, but the issue is reported only after 990 iterations. I am taking the frames from OpenCV, and when the frame count reaches 990 this error is generated. So why does it happen only after 990 iterations? If I were consuming too much memory, it should have been reported at the start.

Hi,

I'm having some trouble getting this example to run on my machine: I keep getting a 'Bad allocation' error. I resorted to commenting out lines one by one, and I managed to track the error down to the line 'auto dets = net(img);'.

Now, I'm a complete C++/CMake novice, so it's quite possible that I did something wrong. However, I haven't got a clue how to investigate this further. Can I toggle something to give me more verbose error messages? Or did I miss something while building? Any nudge in the right direction would be much appreciated.

Bad allocation means you are running out of RAM.

Ah, I see.

I had compiled for 32-bit, thinking that would be enough. Switching to 64-bit fixed the problem.

Thanks!

>I will be upgrading the detector to support multiple aspect ratios and object categories. This will probably happen in dlib 19.4.

Do you plan on making this detector reach state-of-the-art results on VOC2007+VOC2012 and the COCO object detection challenge too? Sorry if I am asking too much; the dlib detector already provides a decent API and rich documentation, can train a reasonable detector from only a few training images, and is easy to build and cross-platform. Thanks for your hard work.

I expect to be able to get state-of-the-art results. But we will see. Expectation is not a guarantee of future performance :)

I have a few questions after reading your paper about MMOD.

- Are you still using a sliding window classifier now that you have switched from HOG to CNN?

If yes, this means you use y* = argmax_y sum_{r in y} f(x, r) as the scoring function, right?

- I was wondering how you go from this scoring function of a window to the coordinates of the bounding box. Does your window change in size (using an image pyramid), with the highest-scoring window == the bounding box coordinates, or do you somehow search within the highest-scoring window for the right bounding box coordinates?

Thanks for reading.

Yes, it's a sliding window over an image pyramid.

Thanks for your reply. Just for clarification, so the highest-scoring window == the bounding box coordinates?

Yes, basically.
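To make the scoring function concrete: MMOD picks the set of non-overlapping windows with the largest summed score, and, as I understand the MMOD paper, that argmax is approximated greedily. A minimal standalone sketch, assuming IoU as the overlap test and a hypothetical `Window` struct; this is not dlib's code:

```cpp
#include <algorithm>
#include <vector>

struct Window { double left, top, right, bottom, score; };

// Intersection over union of two boxes (continuous coordinates).
double iou(const Window& a, const Window& b) {
    const double iw = std::max(0.0, std::min(a.right, b.right) - std::max(a.left, b.left));
    const double ih = std::max(0.0, std::min(a.bottom, b.bottom) - std::max(a.top, b.top));
    const double inter = iw * ih;
    const double area_a = (a.right - a.left) * (a.bottom - a.top);
    const double area_b = (b.right - b.left) * (b.bottom - b.top);
    const double uni = area_a + area_b - inter;
    return uni > 0 ? inter / uni : 0.0;
}

// Greedy approximation of the argmax: visit windows from highest to lowest
// score and keep each positive-scoring one that doesn't overlap an already
// kept window by more than iou_thresh.
std::vector<Window> greedy_select(std::vector<Window> windows, double iou_thresh) {
    std::sort(windows.begin(), windows.end(),
              [](const Window& a, const Window& b) { return a.score > b.score; });
    std::vector<Window> kept;
    for (const Window& w : windows) {
        if (w.score <= 0) break;  // sorted, so no later window can raise the sum
        bool overlaps = false;
        for (const Window& k : kept)
            if (iou(w, k) > iou_thresh) { overlaps = true; break; }
        if (!overlaps) kept.push_back(w);
    }
    return kept;
}
```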

Hello,

Nice job.

Have you measured the accuracy on other objects as well (rather than faces)?

This is a CNN tool, so it works well for many other things. CNNs are the current state of the art in object detection.

Okay, thanks.

Is this a single-class object detector, or can it detect multiple object classes?

Single class

I am trying to run dlib's dnn_mmod_ex.cpp to train a face detector with just the 5 images it provides. The example mentions that the learning rate will get smaller as time passes. My code has been running for more than 24 hours (with SSE4 instructions enabled and in release mode) and is past 450 steps, but the learning rate is still not getting smaller and it's not converging. What should I do?

You need to use CUDA with a decent graphics card or it's going to take forever.
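Why the learning rate may not have moved after 450 steps: the trainer only shrinks it after a long stretch without apparent progress (the "steps without apparent progress" counter in the training log). A simplified sketch of such a plateau schedule; the class is hypothetical, and dlib's trainer decides "no progress" with a statistical test on the loss history rather than the simple best-loss comparison used here:

```cpp
#include <cstddef>
#include <limits>

// Simplified plateau-based learning-rate schedule (illustration only).
class PlateauSchedule {
public:
    PlateauSchedule(double lr, double shrink, std::size_t threshold)
        : lr_(lr), shrink_(shrink), threshold_(threshold) {}

    // Call once per training step with that step's loss; returns the current rate.
    double step(double loss) {
        if (loss < best_) {
            best_ = loss;
            stale_ = 0;
        } else if (++stale_ >= threshold_) {
            lr_ *= shrink_;  // plateau reached: shrink and start counting again
            stale_ = 0;
        }
        return lr_;
    }

private:
    double lr_, shrink_;
    std::size_t threshold_;
    std::size_t stale_ = 0;
    double best_ = std::numeric_limits<double>::infinity();
};
```

With a threshold in the hundreds or thousands of steps and slow CPU-only steps, a long wait before the first shrink is expected.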

Hi Davis

Thanks for the great work.

Is it possible to test the detection without a graphics card and CUDA, even if it is slow? You say 370ms. In some applications that is quite adequate.

Yes, it's possible, that's what I mean by "run on the CPU".

I tried the executable from a normal dlib compilation on Linux, and the program is stuck with a blank window with no title.

I will look again.

Hello Davis,

Thank you very much for your great work and thank you for sharing it :)

I am running the face mmod in a Linux machine with a NVIDIA Titan X GPU (Maxwell version) and the code is taking over 600ms to process a single image. I have read that in your case this code takes 45ms when you process the images one at a time.

I am using CUDA 8 with cuDNN 5.1 and nothing else (no OpenCV, no SQLite or any other library). The compilation was OK (it found CUDA and cuDNN correctly), and I checked with the nvidia-smi command that the example was using the GPU while it was running. So, my question is: are you using any extra library or procedure to get better times? The time I am getting is 10 times slower than yours and I don't understand why this could be happening.

The runtime is a function of image size. I bet you are using bigger images than I mentioned regarding those times.

Thank you for your fast answer :)

I am using the "2008_002506.jpg" image that is provided in the "faces" folder with the "pyramid_up(img)" disabled.

Maybe you are timing it wrong. Don't time the whole program. There is significant CUDA startup time.

I start measuring the time on the line after the deserialization of "mmod_human_face_detector.dat" and stop measuring before the `cout << "Hit enter to process the next image." << endl;` line, and I had disabled all the windows and image displays.

Don't just measure one call. There is CUDA context startup overhead. You have to measure many calls.
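A minimal timing helper in that spirit, using only the standard library (`average_ms` is a hypothetical name): it makes a few untimed warm-up calls to absorb one-off costs such as CUDA context creation, then averages the wall-clock time over many calls.

```cpp
#include <chrono>
#include <cstddef>

// Calls fn() `warmup` times untimed, then returns the average wall-clock time
// in milliseconds over `runs` further calls.
template <typename Fn>
double average_ms(Fn&& fn, std::size_t runs, std::size_t warmup = 1) {
    for (std::size_t i = 0; i < warmup; ++i) fn();
    const auto start = std::chrono::steady_clock::now();
    for (std::size_t i = 0; i < runs; ++i) fn();
    const std::chrono::duration<double, std::milli> elapsed =
        std::chrono::steady_clock::now() - start;
    return runs ? elapsed.count() / runs : 0.0;
}
```

Usage with the example's network would look like `double ms = average_ms([&] { auto dets = net(img); }, 20);`.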

Hello Davis, is there any way to increase the speed of the current CNN-based dlib face detector? I tried reducing the pyramid size from 6 to 4, at the cost of losing some faces, but I am fine with that. Please suggest something. I am working on an NVIDIA Jetson TX1 board and also have an NVIDIA GTX 1080. I am trying to process 4MP images.

ReplyDelete"Don't just measure one call. There is cuda context startup overhead. You have to measure many calls."

That was the reason. I was only trying it on one image, and I didn't know that CUDA needed such a significant amount of time to start up. Thank you very much!!

I ran the test on Ubuntu 16.04, and dlib is compiled with CUDA. The GPU is a GTX 1060.

I use the images in the faces folder, and I disable pyramid_up. I record the execution time in the following way:

```cpp
cout << "start" << endl;
int start = getCurrentTime();
auto dets = net(img);
int end = getCurrentTime();
cout << "finish" << end - start << endl;
```

The execution time is about 1~2 seconds, which is much slower than you mentioned. And I have "measured many calls".

I am so confused and have no idea why the test is slow.

I realized that I had not compiled with GPU support, because I used the g++ command to compile the example. I used CMake to recompile the example, and the execution time is now less than 100ms.

Do you know any way to compile with GPU support using the g++ command?

Hi Davis,

Is there any way to decrease the sliding window size for the face detector?

Regards,

@Davis, great work with dlib! I have researched online for various libraries out there, and dlib is one of the best.

I am training a new object detector to be used in a smartphone app. Your comment about performance in dnn_mmod_face_detection_ex.cpp caught my attention: "[CNN model] takes much more computational power to run, and is meant to be executed on a GPU to attain reasonable speed". Does it mean I should not use the CNN model for a smartphone app and should instead use the HOG-based model shown in face_detection_ex.cpp?

Yes, you would probably want to use the HOG based methods as they are a lot faster. Although what you select really depends on the requirements of your application.

Thanks for your prompt reply!

Hi,

I have a problem compiling dlib using the "Compiling C++ Examples Without CMake" approach. In detail:

I have my own OpenCV project in which I include source.cpp. Everything seems to work fine if I build without CUDA support. If I define DLIB_USE_CUDA, I get lots of undefined references to some "cuda::" functions. I also saw that the dnn folder contains sources that define the namespace "cuda::"; that is where the undefined functions are defined.

Should I modify source.cpp to include those files from dnn?

Could you give me some hints on how to build it?

Note: If I build dlib standalone with CMAKE I do not have errors in dlib, but I need to use it in my opencv project.

Hi,

I found a solution (it was cumbersome). In case someone would like a standalone build, I will detail the steps (some might be redundant, but I list everything I did to get it working):

1) made a correction inside a CUDA header:

/usr/include/cudnn.h

-commented a header line and inserted the one below

//#include "driver_types.h"

#include

2) made a correction in: dlib/dnn/cudnn_dlibapi.cpp

Added one extra parameter, CUDNN_DATA_FLOAT (see below):

- cudnnSetConvolution2dDescriptor(l->convDesc, padding, padding, l->stride, l->stride, 1, 1, CUDNN_CROSS_CORRELATION);

+ cudnnSetConvolution2dDescriptor(l->convDesc, padding, padding, l->stride, l->stride, 1, 1, CUDNN_CROSS_CORRELATION, CUDNN_DATA_FLOAT);

3) go into the dnn directory and build the .cu file:

nvcc --gpu-architecture=compute_52 --gpu-code=compute_52 -std=c++11 `pkg-config --cflags opencv` -I/usr/local/cuda/include/ -DDLIB_USE_CUDA --compiler-options "-Wall -O0 -g " -c ./cuda_dlib.cu -o cuda_dlib.o

4) build the rest of the .cpp files (note that I passed both USE_CUDA defines; you can check the sources to see which one is correct, or just leave both as shown):

nvcc -ccbin g++ -std=c++11 -O3 -DDLIB_USE_CUDA -DLIB_USE_CUDA -I. -I../../ -c ./*.cpp -I/usr/local/cuda/include -lcudnn -lcublas -lcudart -lpthread -lX11 cuda_dlib.o

5) cp *.o

6) In your project, include source.cpp, then link with the object files you copied.

7) Build the application. All the dnn functions will call CUDA primitives, so it will run on the GPU.

I could confirm this from the training speedup. Before, using only the 8 CPU cores, I waited 40 minutes and training made no progress past the first step. Now the processing on the GPU is fast, and multiple iterations have been saved.

I had a typo in step 1). Step 1) should be:

-commented out a header line and inserted the one below it

//#include "driver_types.h"

#include

One more thing:

At step 3) you should be in the ./dnn folder, so that everything in dnn is built and the object files can be copied.

That is not a sensible thing to do. If you find yourself editing header files for CUDA or dlib, you are going down a bad path. Really, you should just use CMake; you can easily use CMake with OpenCV.

Or, if you really don't want to use CMake for some reason, you can compile dlib and install it: go into the dlib folder, run cmake, then run make install.

You mentioned 370ms for the CPU detector. Was that with the 4-image model or the 6k model? I'm running the MMOD face example on the CPU, but it takes 10+ seconds per image. Am I compiling it wrong or something?

I don't recall. But the speed difference between the two example programs isn't very large. You are seeing this speed problem because either you haven't enabled compiler optimizations, or you aren't using a BLAS library like the Intel MKL, or you are timing the whole program rather than the detector. Also: http://dlib.net/faq.html#Whyisdlibslow
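A minimal sketch of timing only the detector rather than the whole program, using just the C++ standard library. `median_call_ms` is a hypothetical helper, not part of dlib. In a real program the lambda would wrap only the detection call (in the face example, something like `auto dets = net(img);`), with the model loaded, the image read, and any upsampling done before the timer starts, and the program built with optimizations enabled.

```cpp
#include <algorithm>
#include <cassert>
#include <chrono>
#include <cstddef>
#include <vector>

// Hypothetical helper: time only the call you care about (e.g. running the
// detector on an already-loaded image), not model loading or image I/O.
// Returns the median wall-clock time in milliseconds over `runs` calls.
template <typename F>
double median_call_ms(F&& call, std::size_t runs = 5)
{
    assert(runs > 0);
    std::vector<double> times;
    times.reserve(runs);
    for (std::size_t i = 0; i < runs; ++i)
    {
        const auto start = std::chrono::steady_clock::now();
        call();  // only this call is inside the timed region
        const auto stop = std::chrono::steady_clock::now();
        times.push_back(std::chrono::duration<double, std::milli>(stop - start).count());
    }
    // The median is less sensitive than the mean to a slow first run
    // (e.g. one-time allocations or cache warm-up).
    std::nth_element(times.begin(), times.begin() + times.size() / 2, times.end());
    return times[times.size() / 2];
}
```

Reporting the median of several runs, rather than a single measurement, keeps one-off startup costs from dominating the per-image number.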

I will try again, thanks!

Davis, the issue is the upsampling in the example, which greatly increases the network's running time; that probably makes sense. If I keep the original image sizes, I get close to your timings, even with OpenBLAS.

This detector at this stage is even better than most cloud-based detectors out there. Excellent work.

Thanks, glad you like it :)

Yeah, upsampling the image is going to make it run slower for sure.
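The cost of upsampling is easy to estimate. This is a rough sketch, assuming each upsampling step doubles both image dimensions (so it quadruples the pixel count) and that the network's run time grows roughly linearly with the number of pixels. `upsample_until` is a hypothetical helper, and the 1800*1800 pixel threshold used in the test is an assumed value for illustration.

```cpp
#include <cassert>
#include <cstdint>

// Result of repeatedly doubling an image's dimensions.
struct upsample_result
{
    int steps;            // how many times the image was upsampled
    std::int64_t pixels;  // pixel count after upsampling
};

// Models a loop of the form "while (pixels < min_pixels) upsample(img);",
// assuming each step exactly doubles width and height.
upsample_result upsample_until(std::int64_t width, std::int64_t height, std::int64_t min_pixels)
{
    assert(width > 0 && height > 0);
    upsample_result r{0, width * height};
    while (r.pixels < min_pixels)
    {
        width *= 2;
        height *= 2;
        r.pixels = width * height;  // 4x more pixels per step
        ++r.steps;
    }
    return r;
}
```

For a 640x480 input and a 1800*1800 pixel threshold this gives two steps and 16 times as many pixels, so on the order of 16 times the work. After k steps the pixel count is 4^k times the original, which is why skipping the upsampling makes such a large difference.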

Hi,

I've got a question about runtime initialization.

Let's say we have a network such that:

using net_type = loss_multiclass_log<fc<10,

...

function_x() {

net_type net2(num_fc_outputs(15));

...

}

So I can change the number of outputs of the net dynamically. But as you can see, the variable net2 is local to the function's scope.

So let's say I have a class with a method that trains the model. (The "train" function will retrain the model if new subjects are added, which means the net's output layer will change; other layers may change as well.) I want the "net" variable to be a member of that class rather than defined locally in the train function.

In the train function the net variable will have the output layer changed then the network will be trained.

In another method I will use the "net" for prediction.

So I want something like net2.num_fc_outputs(15) that I can call in whichever function I need.

How can I update a global "net" variable if the templates are statically defined?

As I said, the model changes: today I have x objects; in a few days I could have x + N.

If you have a new network you just trained you can assign it to another network object, just like you would with any other C++ object. E.g.

this->net = my_new_net_i_just_trained;
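A sketch of the "hold the network by value and reassign it" pattern. To keep it self-contained it uses `toy_net`, a hypothetical stand-in for a dlib network type; `subject_recognizer` and `train` are also hypothetical names. The comment in `train` shows where the `net_type new_net(num_fc_outputs(...))` construction from the question would go.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a network type: an ordinary value type that can be
// constructed with a runtime output count, copied, and assigned.
class toy_net
{
public:
    explicit toy_net(std::size_t num_outputs = 10) : weights_(num_outputs, 0.0f) {}
    std::size_t num_outputs() const { return weights_.size(); }
private:
    std::vector<float> weights_;
};

class subject_recognizer
{
public:
    // Build a fresh network sized for the current number of subjects, train it
    // (omitted here), then replace the member with plain assignment.
    void train(std::size_t num_subjects)
    {
        toy_net new_net(num_subjects);  // with dlib: net_type new_net(num_fc_outputs(num_subjects));
        // ... train new_net here ...
        net = new_net;                  // same as this->net = new_net;
    }

    std::size_t outputs() const { return net.num_outputs(); }

private:
    toy_net net;  // held by value: no pointer and no manual delete
};
```

Because the member is a value, each retraining simply overwrites the old network, and the prediction method can use `net` directly.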

Hi,

I think it's simple:

I define a class member pointer such as:

net_type *net2;

Then further I will use the "new" operator together with the type specifier:

net2 = new net_type(num_fc_outputs(my_new_number_of_outputs));

You don't need to use a pointer. Just assign it like any other object. E.g.

int a = 0;

int a_new_int = 4;

a = a_new_int; // no pointer needed.

Hello Davis

Thank you for such a great library.

Are you going to make a GPU HOG face detector?

Thanks, I'm glad you like dlib.

I'm not planning on writing a GPU version of HOG.

That's too bad.

I am trying to increase face detection performance to 30 fps on 1280 x 700 video.

Is it possible to use some kind of mask with the dlib face detector? For example, run a skin detector first and then process only part of the image?

You can crop out part of an image based on any method you like and then run the detector on that image crop. How you do this is up to you.
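At 30 fps the budget is about 1000 / 30 ≈ 33 ms per frame, so running the detector on a smaller crop can help. The one detail to get right is that detections come back in crop coordinates. This sketch uses a hypothetical `box` struct (dlib has its own rectangle class) and hypothetical helper names; how the region of interest is chosen, e.g. by a skin detector, is left out.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Minimal rectangle with inclusive corner coordinates (hypothetical type).
struct box
{
    long left, top, right, bottom;
};

// Clamp a region of interest (e.g. from a skin detector) to the image bounds.
box clamp_roi(box roi, long img_width, long img_height)
{
    roi.left = std::max(0L, roi.left);
    roi.top = std::max(0L, roi.top);
    roi.right = std::min(img_width - 1, roi.right);
    roi.bottom = std::min(img_height - 1, roi.bottom);
    assert(roi.left <= roi.right && roi.top <= roi.bottom);  // ROI must overlap the image
    return roi;
}

// Detections found in a crop are in crop coordinates; shift them by the crop's
// top-left corner to get coordinates in the full frame.
std::vector<box> to_frame_coords(const std::vector<box>& dets_in_crop, const box& roi)
{
    std::vector<box> out;
    out.reserve(dets_in_crop.size());
    for (const box& d : dets_in_crop)
        out.push_back({d.left + roi.left, d.top + roi.top,
                       d.right + roi.left, d.bottom + roi.top});
    return out;
}
```

Note that faces partly outside the crop may be missed, so it is usually worth padding the region of interest a little before cropping.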

Does this network require the same input image size? I mean, if you pack the image pyramid levels into one image, they cannot be the same size, right?

Input sizes can be anything.
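To illustrate why a fully convolutional network has no problem with this, here is a sketch that computes pyramid level sizes and the size of one image holding all of them. It assumes each level is scaled by 5/6 (the ratio of dlib's `pyramid_down<6>`; dlib's exact rounding may differ) and uses the simplest possible packing, stacking levels vertically. That layout is an assumption for illustration, not dlib's actual tiling; `pyramid_levels` and `packed_size` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

struct level_size
{
    long width, height;
};

// Sizes of an image pyramid where each level is 5/6 the size of the previous
// one, stopping once a level would be smaller than min_side.
std::vector<level_size> pyramid_levels(long width, long height, long min_side)
{
    assert(width > 0 && height > 0 && min_side > 0);
    std::vector<level_size> levels;
    while (width >= min_side && height >= min_side)
    {
        levels.push_back({width, height});
        width = width * 5 / 6;
        height = height * 5 / 6;
    }
    return levels;
}

// Stack the levels vertically, left-aligned, with `padding` rows between them.
// The packed image is as wide as the largest level and as tall as all levels.
level_size packed_size(const std::vector<level_size>& levels, long padding)
{
    level_size packed{0, 0};
    for (std::size_t i = 0; i < levels.size(); ++i)
    {
        packed.width = std::max(packed.width, levels[i].width);
        packed.height += levels[i].height + (i > 0 ? padding : 0);
    }
    return packed;
}
```

Every level has a different size, but the packed result is just one more image of arbitrary size; the network slides its filters over it the same way, and detections are mapped back to the level they fell in.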

Thanks for the great post. Where can I find the FDDB evaluation software? Is there a feature in dlib for evaluating object detection algorithms?

Look at the first Google result for FDDB.
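For a quick self-check outside of FDDB, the core of most detection evaluations is matching detections to ground truth by overlap. The sketch below is a generic greedy intersection-over-union matcher with a 0.5 threshold; it is not FDDB's official evaluation software, which has its own matching rules. dlib's example programs such as dnn_mmod_ex.cpp report results with `test_object_detection_function`, which is worth checking in the dlib documentation. All names here are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

struct det_box
{
    double left, top, right, bottom;  // corners, with right > left and bottom > top
};

double area(const det_box& b) { return (b.right - b.left) * (b.bottom - b.top); }

// Intersection over union of two axis-aligned boxes.
double iou(const det_box& a, const det_box& b)
{
    const double w = std::min(a.right, b.right) - std::max(a.left, b.left);
    const double h = std::min(a.bottom, b.bottom) - std::max(a.top, b.top);
    if (w <= 0 || h <= 0)
        return 0.0;
    const double inter = w * h;
    return inter / (area(a) + area(b) - inter);
}

struct pr_result
{
    double precision, recall;
};

// Greedy matching: each detection claims the best unmatched truth box with
// IoU >= threshold. Pass detections sorted by descending score.
pr_result precision_recall(const std::vector<det_box>& truth,
                           const std::vector<det_box>& dets,
                           double threshold = 0.5)
{
    std::vector<bool> used(truth.size(), false);
    std::size_t true_pos = 0;
    for (const det_box& d : dets)
    {
        std::size_t best = truth.size();  // "no match" sentinel
        double best_iou = threshold;
        for (std::size_t i = 0; i < truth.size(); ++i)
        {
            const double o = iou(d, truth[i]);
            if (!used[i] && o >= best_iou)
            {
                best = i;
                best_iou = o;
            }
        }
        if (best < truth.size())
        {
            used[best] = true;
            ++true_pos;
        }
    }
    // Convention chosen here: empty inputs count as perfect precision/recall.
    const double p = dets.empty() ? 1.0 : double(true_pos) / dets.size();
    const double r = truth.empty() ? 1.0 : double(true_pos) / truth.size();
    return {p, r};
}
```

Sweeping a score threshold over the detections and recording precision and recall at each step gives the kind of curve that benchmark plots summarize.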

Hello Davis.

I want to create a detector that finds all trucks in an image using your dlib library. I am trying to use the http://dlib.net/dnn_mmod_ex.cpp.html example. When I train my detector, should I put all possible trucks in all possible poses into the training dataset? Could you please give me a hint about which direction to take? Thanks

Can I use this library from Java?

There aren't any Java bindings that I know of, but you can easily make some using SWIG.

Yes, that's part of the output. Look at the API documentation for the object_detector for the details.

Whenever I try to load the 'mmod_human_face_detector.dat' model, it says "File format not recognized collect2: error: ld returned 1 exit status". How can I get rid of this problem?

Hello Davis,

I am trying to train the CNN. I edited the dnn_mmod_ex1.cpp program; the CNN architecture code is as follows:

template using con5d = con;

template using con5 = con;

template using downsampler = relu>>>>>>>>;

template using rcon5 = relu>>;

using net_type = loss_mmod>>>>>>>;

Also,

mmod_options options(face_boxes_train, 40, 40);

Training completes successfully. But at test time, it shows the following error:

Error detected at line 501.

Error detected in file ../dlib/all/../dnn/cpu_dlib.cpp.

Error detected in function void dlib::cpu::affine_transform(dlib::tensor&, const dlib::tensor&, const dlib::tensor&, const dlib::tensor&).

Failing expression was ((A.num_samples()==1 && B.num_samples()==1) || (A.num_samples()==src.num_samples() && B.num_samples()==src.num_samples())) && A.nr()==B.nr() && B.nr()==src.nr() && A.nc()==B.nc() && B.nc()==src.nc() && A.k() ==B.k() && B.k()==src.k().

I also tried using bn_con (a batch normalization layer) instead of the affine layer, but the results are totally unexpected.

Please help me.

I'm facing a similar problem, but I'm using the CUDA code.

I used the provided dlib dataset and XML files. Besides the changes stated in the face detector documentation, the only change I made was to reduce the batch size from 150 to 50, since my GPU has only 4GB of memory:

cropper(50, images_train, face_boxes_train, mini_batch_samples, mini_batch_labels);

The generated .dat file is 24MB, and running detection with it produces the error below.

It seems an average loss of about 1.3 is the best I can get. Is this good enough?

===========================

Saved state to mmod_sync

step#: 22954 learning rate: 0.0001 average loss: 1.36111 steps without apparent progress: 2360

step#: 22985 learning rate: 0.0001 average loss: 1.32451 steps without apparent progress: 2399

step#: 23016 learning rate: 0.0001 average loss: 1.36526 steps without apparent progress: 2425

step#: 23047 learning rate: 0.0001 average loss: 1.35642 steps without apparent progress: 2451

step#: 23078 learning rate: 0.0001 average loss: 1.34853 steps without apparent progress: 2490

Saved state to mmod_sync_

done training

Error detected at line 1007.

Error detected in file ..../dlib-19.6/dlib/dnn/cuda_dlib.cu.

Error detected in function void dlib::cuda::affine_transform(dlib::tensor &, const dlib::tensor &, const dlib::tensor &, const dlib::tensor &).

Failing expression was A.nr()==B.nr() && B.nr()==src.nr() && A.nc()==B.nc() && B.nc()==src.nc() && A.k() ==B.k() && B.k()==src.k().

A.nr(): 567

B.nr(): 567

src.nr(): 477

A.nc(): 159

B.nc(): 159

src.nc(): 134

A.k(): 16

B.k(): 16

src.k(): 16

==============================================

I have no idea what you guys did since you didn't post any code.

Hi Davis,

Thanks for the reply.

I am trying to train the CNN using the sample program "dnn_mmod_ex1.cpp" (without changing any parameters). But after training finishes, it doesn't detect any faces. I used a training dataset of 300 images.

What should I do to train the CNN properly? Do I need more training images, or should iterations_without_progress_threshold be increased to 8000?